Building compositional tasks with shared neural subspaces


Monkeys

Two adult male rhesus macaques (Macaca mulatta) participated in the experiment. The number of monkeys (2) follows previous work using similar approaches21. Monkeys Si and Ch were between 8 and 11 years old and weighed approximately 12.7 and 10.7 kg, respectively. All of the experimental procedures were approved by the Princeton University Institutional Animal Care and Use Committee (protocol, 3055) and were in accordance with the policies and procedures of the National Institutes of Health.

Behavioural task

Each trial began with the monkeys fixating on a dot at the centre of the screen. During a fixation period lasting 500 ms–800 ms, the monkeys were required to keep their gaze within a circle with a radius of 3.25 degrees of visual angle around the fixation dot. After the fixation period, the stimulus and all four response locations were simultaneously displayed.

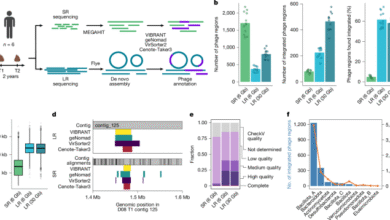

Stimuli were morphs consisting of both a colour and shape (Fig. 1a). The stimuli were rendered as three-dimensional models using POV-Ray and MATLAB (MathWorks) and displayed using Psychtoolbox on a Dell U2413 LCD monitor positioned 58 cm from the animal. Stimuli were morphed along circular continua in both colour and shape (that is, drawn from a four-dimensional ‘Clifford’ torus; Fig. 1b). Colours were drawn from a photometrically isoluminant circle in the CIELAB colour space, connecting the red and green prototype colours. Shapes were created by circularly interpolating the parameters defining the lobes of the ‘bunny’ prototype to the parameters defining the corresponding lobes of the ‘tee’ prototype. The mathematical representation of the morph levels adhered to the equation $X_1^2 + X_2^2 + X_3^2 = P^2$, where $X_i$ represents the parameter value in feature dimension $i$ (for example, the L, a and b values in CIELAB colour space).

We chose the radius (P) to ensure sufficient visual discriminability between morph levels. The deviation of each morph level from the prototypes (0% and 100%) was expressed as a percentage, corresponding to positions on the circular space from −π to 0 and from 0 to π. Morph levels were generated at eight levels: 0%, 30%, 50%, 70%, 100%, 70%, 50% and 30%, corresponding to 0, π/6, π/2, 5π/6, π, −5π/6, −π/2 and −π/6, respectively. 50% morph levels for one feature were only generated for prototypes of the other feature (that is, 50% colours were only used with 0% or 100% shape stimuli and vice versa). Stimuli were presented at fixation and had a diameter of 2.5 degrees of visual angle.
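The stimulus grid implied by these morph levels can be enumerated in a few lines. This is a minimal sketch, assuming each stimulus is fully specified by a (colour angle, shape angle) pair and that the 50% pairing rule is the only restriction on combinations. The names `MORPH_LEVELS`, `build_stimulus_set` and `clifford_torus_point` are hypothetical, and the (cos θc, sin θc, cos θs, sin θs)/√2 embedding is the textbook Clifford torus, shown for illustration rather than as the rendering pipeline.

```python
import math

# (percent morph, position on the circular continuum in radians)
MORPH_LEVELS = [
    (0, 0.0),
    (30, math.pi / 6),
    (50, math.pi / 2),
    (70, 5 * math.pi / 6),
    (100, math.pi),
    (70, -5 * math.pi / 6),
    (50, -math.pi / 2),
    (30, -math.pi / 6),
]
PROTOTYPES = (0, 100)


def build_stimulus_set():
    """Return (colour_pct, colour_angle, shape_pct, shape_angle) tuples.

    A 50% morph in one feature is only paired with a prototype (0% or 100%)
    of the other feature, so 50% x 50% stimuli are never generated.
    """
    stimuli = []
    for c_pct, c_ang in MORPH_LEVELS:
        for s_pct, s_ang in MORPH_LEVELS:
            if c_pct == 50 and s_pct not in PROTOTYPES:
                continue
            if s_pct == 50 and c_pct not in PROTOTYPES:
                continue
            stimuli.append((c_pct, c_ang, s_pct, s_ang))
    return stimuli


def clifford_torus_point(c_ang, s_ang):
    """Map a (colour, shape) angle pair onto the unit Clifford torus in R^4."""
    r = 1 / math.sqrt(2)
    return (r * math.cos(c_ang), r * math.sin(c_ang),
            r * math.cos(s_ang), r * math.sin(s_ang))


stimuli = build_stimulus_set()
print(len(stimuli))  # 44 colour-shape combinations under these assumptions
```

Of the 64 possible pairings, the 50% rule removes the 20 that combine a 50% morph with a non-prototype level of the other feature.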

The monkeys indicated the colour or shape category of the stimulus by saccading to one of the four response locations, positioned 6 degrees of visual angle from the fixation point at 45, 135, 225 and 315 degrees, relative to the vertical line. The reaction time was taken as the moment of leaving the fixation window relative to the time of stimulus onset. Trials with a reaction time below 150 ms were terminated, followed by a brief timeout (200 ms). Correct responses were rewarded with juice, while incorrect responses resulted in short timeouts lasting 1 s for monkey Ch and 5 s for monkey Si. After the trial finished, there was an intertrial interval lasting 2–2.5 s before the next trial began.
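A sketch of the reaction-time rule, assuming gaze is sampled at 1 kHz (the Eyelink rate given under Surgical procedures) and expressed in degrees relative to the fixation dot. `reaction_time_ms` and `is_premature` are hypothetical helpers, and leaving the fixation window is taken to mean the first sample whose radial distance exceeds the 3.25° radius.

```python
import math

FIX_RADIUS_DEG = 3.25  # fixation window radius (degrees of visual angle)
MIN_RT_MS = 150        # faster responses were terminated


def reaction_time_ms(eye_x, eye_y, stim_onset_idx, sample_rate_hz=1000):
    """Time from stimulus onset to the first gaze sample outside the fixation window.

    eye_x, eye_y: gaze position in degrees, relative to the fixation dot.
    Returns None if gaze never leaves the window.
    """
    for i in range(stim_onset_idx, len(eye_x)):
        if math.hypot(eye_x[i], eye_y[i]) > FIX_RADIUS_DEG:
            return (i - stim_onset_idx) * 1000.0 / sample_rate_hz
    return None


def is_premature(rt_ms):
    """True for responses that would have been terminated (RT < 150 ms)."""
    return rt_ms is not None and rt_ms < MIN_RT_MS
```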

The animals performed three category-response tasks (Fig. 1c). The S1 task required the monkeys to categorize the stimulus by its shape. For a stimulus with a shape that was closer to the ‘bunny’ prototype, the animals had to make a saccade to the upper-left (UL) location to be rewarded. For a stimulus with a shape closer to the ‘tee’ prototype, the animals had to make a saccade to the lower-right (LR) location to be rewarded. For ease of notation, we refer to the combination of the UL and LR target locations as axis 1. The C1 task required the monkeys to categorize the stimulus by its colour. When a stimulus’s colour was closer to ‘red’, the animals made an eye movement to the LR location and when it was closer to ‘green’ the animals made an eye movement to the UL location.

Finally, in the C2 task, the monkeys again categorized stimuli based on their colour but responded to the upper-right (UR) location for red stimuli and the lower-left (LL) location for green stimuli. Together, the UR and LL targets formed axis 2. This set of three tasks was designed to be related to one another: the C1 and C2 tasks both required categorizing the colour of the stimulus while the C1 and S1 tasks both required responding on axis 1.
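The three stimulus-response mappings can be summarized as a lookup table. This sketch assumes categorical colour (`red`/`green`) and shape (`bunny`/`tee`) labels; `RULES`, `AXIS` and `correct_target` are hypothetical names.

```python
# Correct saccade target for each task, keyed by the task-relevant category.
RULES = {
    "S1": {"bunny": "UL", "tee": "LR"},  # shape task, axis 1
    "C1": {"green": "UL", "red": "LR"},  # colour task, axis 1
    "C2": {"green": "LL", "red": "UR"},  # colour task, axis 2
}
# Response axis of each target location.
AXIS = {"UL": 1, "LR": 1, "UR": 2, "LL": 2}


def correct_target(task, colour, shape):
    """Return the rewarded target for a stimulus with the given categories."""
    relevant = shape if task == "S1" else colour
    return RULES[task][relevant]
```

In this table, C1 and C2 share the relevant feature (colour) but not the axis, whereas C1 and S1 share axis 1 but not the relevant feature.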

The monkeys were not explicitly cued as to which task was in effect. However, they performed the same task for a block of trials, allowing the animal to infer the task based on the combination of stimulus, response and reward feedback. Tasks switched when the monkeys’ performance reached or exceeded 70% on the last 102 trials of task S1 and task C1 or the last 51 trials of task C2. For monkey Si, block switches occurred when their performance reached or exceeded the 70% threshold for all morphed and prototype stimuli in the relevant task dimension independently. For monkey Ch, block switches occurred when their average performance at each morph level in the relevant task dimension reached or exceeded the 70% threshold (that is, the average of all 30% morphs, the average of all 70% morphs, and the 0% and 100% prototypes in the colour dimension were all equal to or greater than 70% accuracy in the C1 and C2 tasks).

Moreover, on a subset of recording days, to prevent monkey Ch from perseverating on one task for an extended period of time, the threshold was reduced to 65% over the last 75 trials for the S1 and C1 tasks after the monkey had already performed 200 or 300 trials on that block.
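A simplified sketch of the block-switch check, modelled on the per-morph-level criterion described for monkey Ch. It assumes each trial is tagged with the morph level of the task-relevant feature, that 50% (ambiguous) morphs are left out of the accuracy calculation, and that the relaxed 65%/75-trial rule applies the same per-level check. None of these details is stated above, and the function names are hypothetical.

```python
from collections import defaultdict

# Trials over which performance is evaluated, per task.
WINDOW = {"S1": 102, "C1": 102, "C2": 51}


def block_should_switch(task, trials, threshold=0.70, window=None):
    """Per-morph-level switch criterion (modelled on the rule described for monkey Ch).

    trials: ordered (relevant_morph_pct, correct) tuples for the current block.
    Returns True when accuracy for every morph-level group (0%, 30%, 70%, 100%)
    over the last `window` trials is >= threshold.
    """
    window = window or WINDOW[task]
    if len(trials) < window:
        return False
    groups = defaultdict(list)
    for pct, correct in trials[-window:]:
        if pct == 50:  # assumption: ambiguous morphs are not scored
            continue
        groups[pct].append(correct)
    if not groups:
        return False
    return all(sum(g) / len(g) >= threshold for g in groups.values())


def block_should_switch_relaxed(task, trials, n_done, relaxed_after=200):
    """Adds the relaxed 65% / 75-trial rule used on some days for S1 and C1 blocks."""
    if task in ("S1", "C1") and n_done >= relaxed_after:
        if block_should_switch(task, trials, threshold=0.65, window=75):
            return True
    return block_should_switch(task, trials)
```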

When the animal hit the performance threshold, the task switched. This was indicated by a flashing yellow screen, a few drops of reward and a delay of 50 s.

To ensure even sampling of tasks despite the limited number of trials each day, the axis of response always changed following a block switch. During axis 1 blocks, either the S1 task or the C1 task was pseudorandomly selected, interleaved with C2 task blocks. Pseudorandom selection within axis 1 blocks avoided three consecutive blocks of the same task, ensuring the animal performed at least one block of each task during each session. On average, animals performed 560, 558 and 301 trials and 2.68, 2.77 and 5.4 blocks per day for the S1, C1 and C2 tasks, respectively. Task orders and trial conditions were randomized across trials within each session.
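The block-scheduling constraints can be sketched as a small generator. It assumes sessions start on axis 1 and reads "avoided three consecutive blocks of the same task" as applying to successive axis-1 blocks. `block_sequence` is a hypothetical name, and the randomization code used in the experiment may differ.

```python
import random


def block_sequence(n_blocks, rng=None):
    """Alternate axis-1 blocks (S1 or C1) with C2 blocks, starting on axis 1.

    An axis-1 task is never chosen a third time in a row across axis-1 blocks.
    """
    rng = rng or random.Random()
    seq, axis1_history = [], []
    for b in range(n_blocks):
        if b % 2 == 1:  # the response axis changes at every block switch
            seq.append("C2")
            continue
        options = ["S1", "C1"]
        if len(axis1_history) >= 2 and axis1_history[-1] == axis1_history[-2]:
            options.remove(axis1_history[-1])
        task = rng.choice(options)
        axis1_history.append(task)
        seq.append(task)
    return seq
```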

Monkeys Si and Ch underwent training for 36 and 60 months, respectively. Both animals were trained in the same order of tasks: S1, C2 and then C1. Each animal underwent exposure to every task manipulation. As all of the animals were allocated to a single experimental group, blinding was neither necessary nor feasible during behavioural training. Electrophysiological recordings began when the monkeys consistently executed five or more blocks daily. Further details on the behavioural methods and results have been previously reported19.

Congruent and incongruent stimuli

During the S1 and C1 tasks, red-tee stimuli and green-bunny stimuli were ‘congruent’, as they required a saccade to the LR and UL locations, respectively, in both tasks. By contrast, stimuli in the red-bunny and green-tee portion of stimulus space were ‘incongruent’, as they required different responses during the two tasks (UL and LR in the S1 task; LR and UL in the C1 task, respectively). Because congruent stimuli can be answered correctly without knowing which axis 1 task is in effect, 80% of the trials used incongruent stimuli to ensure the animals performed the task. Note that, as the C2 task was the only one to use axis 2, there were no congruent or incongruent stimuli on those blocks.
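Congruency follows directly from the S1 and C1 response rules. A minimal sketch with hypothetical names, assuming category pairs are drawn independently with the 80% incongruent probability stated above (the full trial-generation procedure, including morph levels, is not specified here):

```python
import random

# Axis-1 response rules for the two tasks that share axis 1.
S1_TARGET = {"bunny": "UL", "tee": "LR"}
C1_TARGET = {"green": "UL", "red": "LR"}


def is_congruent(colour, shape):
    """Congruent stimuli require the same saccade under the S1 and C1 rules."""
    return S1_TARGET[shape] == C1_TARGET[colour]


def sample_categories(rng, p_incongruent=0.8):
    """Draw a (colour, shape) category pair with 80% incongruent stimuli."""
    if rng.random() < p_incongruent:
        return rng.choice([("red", "bunny"), ("green", "tee")])
    return rng.choice([("red", "tee"), ("green", "bunny")])
```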

Analysis of behavioural data

Psychometric curves plot the fraction of trials in which the animals classified a stimulus with a specific morph level as being a member of the ‘green’ category for the C1 and C2 tasks or the ‘tee’ category for the S1 task. The fraction of responses for a given morph level of the task-relevant stimulus dimension was averaged across all morph levels of the task-irrelevant dimension during the last 102/102/51 trials of the S1/C1/C2 task (for example, for the C1 task, the fraction of responses for 70% green stimuli was averaged across all shape morph levels). As the stimulus space was circular, we averaged the behavioural responses for the two stimuli at each morph level, one on each side of the circle (Fig. 1b). Psychometric curves were quantified by fitting the mean data points with a modified Gauss error function (erf):

$$F = \theta + \lambda \times \left(\mathrm{erf}\left(\frac{x-\mu}{\sigma}\right) + 1\right)/2,$$

where erf is the error function, θ is a vertical bias parameter, λ is a squeeze factor, μ is a threshold, and σ is a slope parameter. Fitting was done in MATLAB with the maximum likelihood method (fminsearch.m function).
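A Python sketch of this fit, swapping MATLAB's `fminsearch` for SciPy's Nelder-Mead implementation (`scipy.optimize.minimize` with `method="Nelder-Mead"`, the same simplex algorithm). It assumes a binomial likelihood over the response counts at each morph level; the exact likelihood and starting values are not stated above, so the initial guess `p0` is illustrative.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import erf


def psychometric(x, theta, lam, mu, sigma):
    """F = theta + lambda * (erf((x - mu) / sigma) + 1) / 2."""
    return theta + lam * (erf((x - mu) / sigma) + 1) / 2


def fit_psychometric(x, n_yes, n_total, p0=(0.05, 0.9, 50.0, 20.0)):
    """Maximum-likelihood fit of (theta, lambda, mu, sigma) with Nelder-Mead.

    x: morph levels (%); n_yes: 'green' or 'tee' responses; n_total: trials per level.
    """
    x, k, n = (np.asarray(a, dtype=float) for a in (x, n_yes, n_total))

    def neg_log_likelihood(params):
        theta, lam, mu, sigma = params
        if sigma <= 0:
            return np.inf
        p = np.clip(psychometric(x, theta, lam, mu, sigma), 1e-6, 1 - 1e-6)
        return -np.sum(k * np.log(p) + (n - k) * np.log(1 - p))

    result = minimize(neg_log_likelihood, p0, method="Nelder-Mead",
                      options={"xatol": 1e-6, "fatol": 1e-8, "maxiter": 5000})
    return result.x
```

Clipping the predicted probability keeps the log-likelihood finite when the simplex proposes values of θ and λ that push F outside (0, 1).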

To calculate performance during task discovery (Fig. 1f), we used a sliding window of 15 trials, stepped by 1 trial, to calculate a running average of performance immediately after a switch or right before a switch. Performance was estimated using trials from all blocks of the same task, regardless of the identity of the stimulus. To test whether behavioural performance differed significantly between the C1–C2–C1 and S1–C2–C1 sequences during task discovery (Fig. 4a; S1–C2–S1 or C1–C2–S1 in Extended Data Fig. 8a and C1–C2 or S1–C2 in Extended Data Fig. 8b), we applied a χ2 test using the chi2test.m function as implemented previously59.
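A sketch of the running average and the sequence comparison. It assumes the χ2 test is run on a 2 × 2 table of correct and incorrect trial counts for the two task sequences; the table layout is an assumption, and SciPy's `chi2_contingency` (which applies Yates' continuity correction to 2 × 2 tables by default) stands in for `chi2test.m`, so P values may differ slightly.

```python
import numpy as np
from scipy.stats import chi2_contingency


def running_accuracy(correct, window=15):
    """Mean accuracy in a `window`-trial window stepped by one trial."""
    c = np.asarray(correct, dtype=float)
    return np.convolve(c, np.ones(window) / window, mode="valid")


def compare_sequences(correct_a, correct_b):
    """Chi-squared test on correct/incorrect counts from two task sequences."""
    a = np.asarray(correct_a, dtype=bool)
    b = np.asarray(correct_b, dtype=bool)
    table = [[a.sum(), (~a).sum()], [b.sum(), (~b).sum()]]
    chi2, p, dof, expected = chi2_contingency(table)
    return chi2, p
```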

To compare the distributions of task performance after and before a switch for the C1 and S1 tasks (Extended Data Fig. 2e,f), we computed behavioural performance in the first 15 trials after the switch and in the last 15 trials before the switch for each block, respectively.

To compare colour categorization performance based on the stimulus shape morph level in the C1 and C2 tasks (Extended Data Fig. 2c), we computed colour categorization psychometric curves by combining each colour morph level with each of three sets of shape morph levels: ambiguous, 50% and 150% (that is, −π/2); intermediate, 30%, 70%, 130% (that is, −5π/6) and 170% (that is, −π/6); and prototype, 0% and 100%.

Surgical procedures and electrophysiological recordings

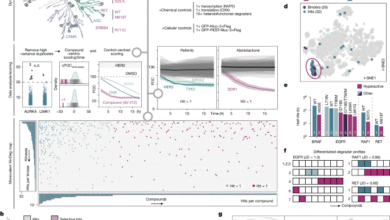

Monkeys were implanted with a titanium headpost to stabilize their head during recordings and two titanium chambers (19 mm diameter) placed over frontal and parietal cortices that provided access to the brain. Chamber positions were determined using 3D model reconstruction of the skull and brain using structural MRI scans. We recorded neurons from the LPFC (Brodmann area 46 d and 46 v, 480 neurons), aIT (area TEa, 239 neurons), PAR (Brodmann area 7, 64 neurons), the FEF (Brodmann area 8, 149 neurons) and the striatum (STR, caudate nucleus, 149 neurons). The number of monkeys and the number of neurons recorded per region were chosen to follow previous work using similar approaches38.

Two types of electrodes were used during recordings. To record from the LPFC and FEF we used epoxy-coated tungsten single electrodes (FHC). Pairs of single electrodes were placed in a custom-built grid with manual micromanipulators that lowered electrode pairs using a screw. This enabled us to record 20–30 neurons simultaneously from cortical areas near the surface. To record from deeper regions (PAR, aIT and STR) we used 16-channel or 32-channel Plexon V-Probes (Plexon). These probes were lowered using the same custom-built grid through guide tubes. During lowering, we used both structural MRI scans and the characteristics of the electrophysiological signal to track the position of the electrode. A custom-made MATLAB GUI tracked electrode depth during the recording session and marked important landmarks until we found the brain region of interest.

Recordings were acute; up to 50 single electrodes and three V-Probes were inserted into the brain each day (in some recording sessions one additional V-Probe was also inserted in the LPFC). Single electrodes and V-Probes were lowered through the intact dura at the beginning of the recording session and allowed to settle for 2–3 h before recording, improving the stability of recordings. Neurons were recorded without bias. Electrodes were positioned to optimize the signal-to-noise ratio of the electrophysiological signal without consideration of neural type or selectivity. Experimenters were blinded to experimental conditions while recording neurons. We did not simultaneously record from all five regions in all recording sessions. We began recording from the LPFC and FEF for the first 5–10 days and then added PAR, STR and aIT on successive days.

Broadband neural activity was recorded at 40 kHz using a 128-channel OmniPlex recording system (Plexon). We performed 15 recording sessions with monkey Ch and 19 recording sessions with monkey Si. After all recordings were complete, we used electrical microstimulation in monkey Si, and structural MRI and microstimulation in monkey Ch, to identify the FEF. Electrical stimulation was delivered as a train of anodal-leading biphasic pulses with a width of 400 µs and an interpulse frequency of 330 Hz. A site was identified as the FEF when electrical microstimulation of around 50 µA evoked a stereotyped eye-movement on at least 50% of the stimulation attempts. In monkey Ch, untested electrode locations were classified as FEF if they fell between two FEF sites (as confirmed with electrical stimulation) in a region that was confirmed as being FEF using MRI.

Eye position was recorded at 1 kHz using an Eyelink 1000 Plus eye-tracking system (SR Research). Sample onset was recorded using a photodiode attached to the screen. Eye position, photodiode signal and behavioural events generated during the task were all recorded alongside neural activity in the OmniPlex system.

Signal preprocessing

Electrophysiological signals were filtered using a 4-pole, 300 Hz high-pass Butterworth filter. To reduce common noise, the median of the signals recorded from all single electrodes in each chamber was subtracted from the activity of all single electrodes. For V-Probe recordings, we subtracted the median activity for all channels along the probe from each channel. To detect spike waveforms, a 4σn threshold was used, where σn is an estimate of the s.d. of the noise distribution of the signal x, defined as:

$$\sigma_n = \mathrm{median}\left(\frac{|x|}{0.6745}\right)$$

Timepoints at which the electrophysiological signal (x) crossed this threshold with a negative slope were identified as putative spiking events. Spike waveforms were saved (total length was 44 samples, 1.1 ms, of which 16 samples, 0.4 ms, were pre-threshold). Repeated threshold crossings within 48 samples (1.2 ms) were excluded. All waveforms recorded from a single channel were manually sorted into single units, multiunit activity or noise using Plexon Offline Sorter (Plexon). Units that were partially detected during a recording session were also excluded. Experimenters were blinded to the experimental conditions while sorting waveforms into individual neurons. All analyses reported in this Article were performed on single units.
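The preprocessing and threshold-crossing steps can be sketched with NumPy and SciPy. Two details are assumptions, not stated above: the filter is applied causally in a single pass (`scipy.signal.sosfilt`), and "crossed this threshold with a negative slope" is read as a downward crossing of −4σn. Manual sorting in Offline Sorter is not reproduced, and the helper names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 40_000            # sampling rate (Hz)
PRE, TOTAL = 16, 44    # samples kept before the crossing / in total (0.4 ms / 1.1 ms)
DEAD = 48              # re-crossings within 48 samples (1.2 ms) are ignored


def highpass(x, fs=FS):
    """4-pole, 300 Hz high-pass Butterworth (causal, single pass)."""
    sos = butter(4, 300, btype="highpass", fs=fs, output="sos")
    return sosfilt(sos, x, axis=-1)


def common_median_reference(channels):
    """Subtract the across-channel median (channels: n_channels x n_samples)."""
    return channels - np.median(channels, axis=0, keepdims=True)


def detect_spikes(x, k=4.0):
    """Negative-going crossings of -k * sigma_n, with sigma_n = median(|x| / 0.6745)."""
    sigma_n = np.median(np.abs(x) / 0.6745)
    below = x < -k * sigma_n
    crossings = np.flatnonzero(~below[:-1] & below[1:]) + 1
    spikes, last = [], -np.inf
    for idx in crossings:
        if idx - last < DEAD:
            continue  # too close to the previous accepted crossing
        if idx - PRE >= 0 and idx - PRE + TOTAL <= len(x):
            spikes.append(idx)
            last = idx
    waveforms = np.array([x[i - PRE:i - PRE + TOTAL] for i in spikes]).reshape(-1, TOTAL)
    return np.array(spikes, dtype=int), waveforms
```

The median-based σn is preferred over the raw s.d. because it is barely affected by the spikes themselves, so the threshold tracks the noise floor.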

For all reported electrophysiology analyses, saccade time was calculated as the moment at which the instantaneous eye speed exceeded a threshold of 720 degrees of visual angle per second. Instantaneous eye speed was calculated as $\sqrt{\left(\frac{\mathrm{d}x}{\mathrm{d}t}\right)^{2}+\left(\frac{\mathrm{d}y}{\mathrm{d}t}\right)^{2}}$, where x and y are the position of the eye on the monitor at time t.
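A sketch of the speed-threshold rule, assuming eye position in degrees sampled at 1 kHz and central-difference derivatives (`numpy.gradient`). The differentiation scheme is an assumption, and `saccade_onset` is a hypothetical name.

```python
import numpy as np


def saccade_onset(x, y, fs=1000.0, threshold=720.0):
    """Index of the first sample whose eye speed exceeds `threshold` (deg/s).

    x, y: eye position in degrees of visual angle, sampled at `fs` Hz.
    Speed is sqrt((dx/dt)^2 + (dy/dt)^2) with central-difference derivatives.
    """
    dt = 1.0 / fs
    vx = np.gradient(np.asarray(x, dtype=float), dt)
    vy = np.gradient(np.asarray(y, dtype=float), dt)
    speed = np.hypot(vx, vy)
    above = np.flatnonzero(speed > threshold)
    return int(above[0]) if above.size else None
```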

Statistics and reproducibility

Independent experiments were performed on two monkeys and the data were combined for subsequent analyses. As described below, statistical tests were corrected for multiple comparisons using two-tailed cluster correction unless stated otherwise. Unless otherwise noted, nonparametric tests were performed using 1,000 iterations; therefore, exact P values are specified when P > 0.001. Unless stated otherwise, all data were smoothed with a 150 ms boxcar. To compare the onset timing differences between within and shared-colour representations across brain regions (Fig. 2g and Extended Data Fig. 6d), classifier accuracy for each region was smoothed using a 50 ms boxcar filter.

All analyses were performed in MATLAB 2021b (MathWorks).

Using cluster mass to correct for multiple comparisons

To assess the significance of observed clusters in the time series data, we used a two-tailed cluster mass correction method60. This approach is particularly useful when dealing with multiple comparisons and helps identify clusters of contiguous timepoints that exhibit statistically significant deviations from the null distribution.

We first generated a null distribution (NullDist) by shuffling the observed data, breaking the relationship between the neural signal and the task parameter of interest (details of how data were shuffled are included with each test below). The observed data were also included as a ‘shuffle’ in the null distribution and the z-score of the null distribution for each timepoint was calculated. To define significant moments in time, we computed the upper and lower thresholds based on the null distribution. The thresholds were determined non-parametrically using percentiles. The resulting thresholds, denoted as $P_{\mathrm{threshold}}^{\mathrm{upper}}$ and $P_{\mathrm{threshold}}^{\mathrm{lower}}$, served as critical values for identifying significant deviations in both tails.

Timepoints of significant signal were identified by finding the moments when the value in each shuffle within the null distribution or the observed data exceeded the computed thresholds. These timepoints were then clustered in time, such that contiguous values above the threshold were summed together. The sum was calculated on the z-transformed values of each time series. This resulted in a mass of each contiguous cluster in the data. To correct for multiple comparisons, we took the maximum absolute value of the cluster mass across time for each shuffle. This resulted in a distribution of maximum cluster masses. Finally, the two-tailed P value of each cluster in the observed data was determined by comparing its mass to the distribution of maximum cluster masses in the null distribution.
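The procedure can be sketched in NumPy as follows. Two choices are assumptions, not stated above: the thresholds are the per-timepoint 2.5th and 97.5th percentiles of the null (α = 0.05, two-tailed), and positive and negative excursions form separate clusters. `cluster_mass_test` is a hypothetical name.

```python
import numpy as np


def _runs(mask):
    """(start, stop) pairs of contiguous True runs in a boolean vector."""
    edges = np.diff(np.concatenate([[0], mask.astype(int), [0]]))
    return list(zip(np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)))


def cluster_mass_test(observed, shuffles, alpha=0.05):
    """Two-tailed cluster-mass test.

    observed: (n_time,) statistic; shuffles: (n_shuffles, n_time) null statistics.
    Returns (start, stop, mass, p) for each cluster in the observed data.
    """
    null = np.vstack([observed[None, :], shuffles])  # observed counts as one 'shuffle'
    sd = null.std(axis=0, ddof=1)
    sd[sd == 0] = 1.0
    z = (null - null.mean(axis=0)) / sd
    upper = np.percentile(null, 100 * (1 - alpha / 2), axis=0)
    lower = np.percentile(null, 100 * alpha / 2, axis=0)

    def clusters(row):
        out = []
        for mask in (null[row] > upper, null[row] < lower):
            for a, b in _runs(mask):
                out.append((a, b, z[row, a:b].sum()))  # mass = summed z-scores
        return out

    # Max |cluster mass| per row gives the multiple-comparison-corrected null.
    max_mass = np.array([max((abs(m) for _, _, m in clusters(r)), default=0.0)
                         for r in range(null.shape[0])])
    return [(a, b, m, float(np.mean(max_mass >= abs(m))))
            for a, b, m in clusters(0)]
```

Because the observed data are part of the null, the smallest attainable P value is 1/(n_shuffles + 1), matching the 1,000-iteration convention described above.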

FR calculation

In all analyses, we estimated the FR of each neuron by averaging the number of action potentials within a 100 ms window, stepping the window every 10 ms. Changing the width of the smoothing window did not qualitatively change our results. The time labels in the figures denote the trailing edge of this moving window (that is, 0 ms would be a window from 0 ms to 100 ms).
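A sketch of the sliding-window firing rate, assuming spike times in milliseconds, half-open windows [start, start + 100 ms), and rates reported in spikes per second. `sliding_rates` is a hypothetical name.

```python
import numpy as np


def sliding_rates(spike_times_ms, t_start, t_stop, width=100.0, step=10.0):
    """Firing rate (spikes/s) in [start, start + width) windows stepped by `step` ms.

    Each window is labelled by its start time, so the label 0 ms covers 0-100 ms.
    """
    spikes = np.sort(np.asarray(spike_times_ms, dtype=float))
    starts = np.arange(t_start, t_stop - width + step / 2, step)
    counts = (np.searchsorted(spikes, starts + width, side="left")
              - np.searchsorted(spikes, starts, side="left"))
    return starts, counts * (1000.0 / width)
```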

GLM

To estimate how individual neurons represented task variables, we used a generalized linear model (GLM) to relate the activity of each neuron (y) to the task variables at each moment of time. The full model was:

$$y = \beta_{0} + \beta_{1} \times \mathrm{stimulus}\,\mathrm{colour}\,\mathrm{category} + \beta_{2} \times \mathrm{stimulus}\,\mathrm{shape}\,\mathrm{category} + \beta_{3} \times \mathrm{time} + \beta_{4} \times \mathrm{task}\,\mathrm{identity} + \beta_{5} \times \mathrm{motor}\,\mathrm{response}\,\mathrm{direction} + \beta_{6} \times \mathrm{reward}$$

where y is the FR of each neuron, normalized to the maximum FR across all trials, and β0, β1, …, β6 are the regression coefficients corresponding to each predictor. Predictors were: stimulus colour category, indicating the colour category of the stimulus (categorical variable); stimulus shape category, indicating the shape category of the stimulus (categorical variable); time, indicating the temporal progression within a recording session, normalized between 0 and 1 (continuous variable); task identity, indicating the identity of the task (categorical variable); motor response direction, indicating the direction of the motor response (categorical variable; UL, UR, LL or LR); reward, indicating whether a reward was received following the response (binary variable; 1 for reward, 0 for no reward).

The GLM coefficients (β) were estimated using maximum-likelihood estimation as implemented by MATLAB’s lassoglm.m function. Independent models were fit for each timepoint. To address potential overfitting, we applied Lasso (L1) regularization to the GLM weights with regularization coefficient (λ) values of 0, 0.0003, 0.0006, 0.0009, 0.0015, 0.0025, 0.0041, 0.0067, 0.0111 and 0.0183. In a separate set of runs, we fit the GLM models to 80% of the data and tested them on the remaining 20%. The λ value with the maximum R2 on the withheld data was used when estimating the CPD.

CPD calculation

To quantify the unique contribution of each predictor to a neuron’s activity, we used the CPD. This metric quantifies the percentage of explained variance that is lost when a specific factor is removed from the full model. The CPD was computed by initially fitting the GLM with all the factors (full model) as described above. Each factor was then sequentially removed to create a set of reduced models, each of which was fit to the data. The CPD for each predictor X(t) was calculated as:

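$$\mathrm{CPD}(X(t)) = \frac{\mathrm{SSE}_{\mathrm{reduced}}(t) - \mathrm{SSE}_{\mathrm{full}}(t)}{\mathrm{SSE}_{\mathrm{reduced}}(t)} \times 100,$$

where SSE_full(t) and SSE_reduced(t) are the sums of squared errors at timepoint t of the full model and of the reduced model lacking X, respectively.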
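A sketch of the CPD computation, assuming the standard definition (the relative increase in residual sum of squares when a factor's columns are dropped, expressed as a percentage). For brevity it swaps the study's Lasso-regularized `lassoglm` fit for ordinary least squares (`numpy.linalg.lstsq`), so values will differ from a regularized fit. `cpd` is a hypothetical name.

```python
import numpy as np


def _sse(X, y):
    """Residual sum of squares of an ordinary least-squares fit."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)


def cpd(design, y):
    """Coefficient of partial determination (%) for each factor.

    design: dict of factor name -> (n_trials, k) column block (categorical factors
    dummy-coded). Dropping a factor removes its whole block from the model.
    """
    n = len(y)
    intercept = np.ones((n, 1))
    sse_full = _sse(np.hstack([intercept, *design.values()]), y)
    out = {}
    for name in design:
        kept = [cols for key, cols in design.items() if key != name]
        sse_reduced = _sse(np.hstack([intercept, *kept]), y)
        out[name] = 100.0 * (sse_reduced - sse_full) / sse_reduced
    return out
```

Run once per timepoint, this yields a CPD time course for each predictor.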

(1) Balanced congruency: classifiers were trained on an equal number of congruent and incongruent trials, resulting in an equal number of trials for the four stimulus types: green-bunny, green-tee, red-bunny and red-tee.

(2) Balanced reward: classifiers were trained on an equal number of rewarded and unrewarded trials, balancing the stimulus identity of the relevant dimension on each side of the classifier.

(3) Balanced response direction: classifiers were trained on an equal number of trials from each response direction on the task’s response axis (for example, trials with a response on axis 1 for the C1 task). This necessarily included error trials but balanced the number of each response direction on each side of the classifier.

Classifying the colour category and shape category of stimuli

Colour and shape classifiers were trained to decode the stimulus colour category or shape category from the vector of activity across the pseudopopulation of neurons (Fig. 2a,b). A balanced number of congruent and incongruent stimuli was included in the training data to ensure colour and shape information could be decoded independently. Classifiers were trained for each task independently.

Most classifiers were trained on correct trials alone. This maximized the number of trials included in the analysis and ensured the animals were engaged in the task. As many of our analyses were tested across tasks, this mitigates concerns that motor response information might confound stimulus category information. However, to ensure response direction did not affect our analyses, we controlled for motor response by balancing response direction in a separate set of analyses (Extended Data Figs. 4a and 5c,d). Although this significantly reduced the number of trials, and required us to include error trials, qualitatively similar results were often observed.
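A sketch of building a congruency-balanced pseudopopulation for one timepoint. It assumes each neuron's trials are grouped by stimulus type and that trials are sampled without replacement, independently per neuron. The data layout and the names `balanced_pseudopopulation` and `colour_labels` are hypothetical.

```python
import numpy as np


def balanced_pseudopopulation(neurons, n_per_type, rng):
    """Build a pseudopopulation with the same number of trials per stimulus type.

    neurons: list with one dict per neuron, mapping stimulus type (for example
    'green-bunny') to a 1-D array of that neuron's firing rates on those trials.
    Returns X (n_pseudotrials x n_neurons) and the stimulus-type label of each row.
    """
    types = sorted(neurons[0])
    labels = np.repeat(types, n_per_type)
    X = np.empty((len(labels), len(neurons)))
    for j, neuron in enumerate(neurons):
        column = []
        for t in types:
            # Sample without replacement so no trial is reused within a type.
            idx = rng.choice(len(neuron[t]), size=n_per_type, replace=False)
            column.append(np.asarray(neuron[t])[idx])
        X[:, j] = np.concatenate(column)
    return X, labels


def colour_labels(labels):
    """Colour category of each pseudotrial, e.g. 'green-bunny' -> 'green'."""
    return np.array([t.split("-")[0] for t in labels])
```

Because every colour label then contains equal numbers of bunny and tee trials, a colour classifier cannot exploit shape (or, within axis 1, the congruent response) to separate the classes.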

The total numbers of LPFC, STR, aIT, PAR and FEF neurons used for colour category classification were 403, 110, 195, 54 and 116, respectively. The total numbers of LPFC, STR, aIT, PAR and FEF neurons used for shape category classification were 480, 149, 239, 64 and 149, respectively.

Classifying response direction

A separate set of response classifiers was trained to decode the motor response from the vector of activity across the pseudopopulation of neurons. Response direction was decoded within each axis (for example, UL versus LR for axis 1). When training and testing on the same task (Fig. 2c), we included only trials on which the animal responded on the correct axis (for example, axis 1 for the S1 and C1 tasks).

For testing whether response direction could be decoded across axes (Extended Data Fig. 5d), we trained the classifier using trials from task C1, where the animal responded on axis 1, and then tested this classifier using trials from task C2, where the animal responded on axis 2, and vice versa. To control for stimulus-related information, we balanced rewarded and unrewarded trials for each condition and only included incongruent trials. This ensured that trials from the same stimulus category were present in both response locations. Similar results were seen when balancing for congruency and reward simultaneously, although the low number of incorrect trials for congruent stimuli resulted in a small set of neurons with the minimum number of training and test trials. The total numbers of LPFC, STR, aIT, PAR and FEF neurons used for response direction classification were 403, 110, 195, 54 and 116, respectively. Owing to the limited number of trials, the total number of LPFC neurons for Extended Data Figs. 4b and 5d was 95 (only from animal Si).

Using permutation tests to estimate the likelihood of classifier accuracy

We used permutation tests to estimate the likelihood that the observed classifier accuracy occurred by chance. To create a null distribution of classifier performance, we randomly permuted the labels of the training data 1,000 times. Importantly, only the task variable of interest was permuted: permutations were performed within the set of trials with the same identity of the other (balanced) task variables. For example, if trials were balanced for reward, we shuffled labels within correct trials and within incorrect trials, separately. This ensured that the shuffling broke only the relationship between the task variable of interest and the activity of neurons. Shuffling of the labels was performed independently for each neuron before building the pseudopopulation and training classifiers. Neurons that were recorded in the same session had identical shuffled labels.
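A sketch of the stratified label shuffle and the percentile-based P value. It assumes trial labels and stratum identifiers (the combination of balanced variables, such as reward) are available per session, and it draws one shared set of shuffles per session so that all neurons from that session receive identical permuted labels. All names are hypothetical.

```python
import numpy as np


def stratified_permutation(labels, strata, rng):
    """Shuffle labels only within groups sharing the same balanced variables."""
    labels, strata = np.asarray(labels), np.asarray(strata)
    shuffled = labels.copy()
    for s in np.unique(strata):
        idx = np.flatnonzero(strata == s)
        shuffled[idx] = labels[rng.permutation(idx)]
    return shuffled


def session_permutations(sessions, n_perm, seed=0):
    """One set of shuffles per session, shared by every neuron from that session.

    sessions: dict of session id -> (labels, strata) for that session's trials.
    """
    rng = np.random.default_rng(seed)
    return {sid: [stratified_permutation(lab, st, rng) for _ in range(n_perm)]
            for sid, (lab, st) in sessions.items()}


def permutation_p(observed, permuted):
    """Fraction of the null (permuted values plus the observed value) >= observed."""
    null = np.append(np.asarray(permuted, dtype=float), observed)
    return float(np.mean(null >= observed))
```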

To stabilize the estimate of the classifier performance, the performance of the classifier on the observed data and on each instance of the permuted data was averaged over 10 folds and 20 to 50 novel iterations of each classifier. The null distribution was the combination of the permuted and observed values (total n = 1,001). The likelihood of the observed classifier performance was then estimated as its percentile within the null distribution. As described above, we used cluster correction to control for multiple comparisons across time.

Testing classification across tasks

To quantify whether the representation of colour, shape or response direction generalized across tasks (Fig. 2e,f,h,i), we trained classifiers on trials from one task and then tested the classifier on trials from another task.

For example, to test cross-generalization of colour information across the C1 and C2 tasks, a colour classifier trained on trials from the C2 task was tested on trials from the C1 task (Fig. 2f). To remove any bias due to differences in baseline FR across tasks, we subtracted the mean FR during each task from all trials of that task (at each timepoint). Similar results were observed when we did not subtract the mean FR.

Cross-temporal classification

To measure how classifiers generalized across time, we trained classifiers to discriminate colour category (Fig. 2e and Extended Data Fig. 5b) or response direction (Fig. 2h) using 100 ms time bins of FR data, sliding by 10 ms.
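A minimal sketch of the cross-task and cross-temporal procedures in this section: per-task mean subtraction, then a classifier trained on each time bin of one task and tested on every time bin of another. The classifier type is not specified here, so `MeanDiffClassifier` (a hyperplane bisecting the two class means) is a hypothetical stand-in; its `distance` method returns the signed distance to the hyperplane, the same quantity as the encoding-axis projection described in this section. Data are assumed to be arrays of trials × neurons × time bins.

```python
import numpy as np


def demean_by_task(X):
    """Subtract one task's mean FR at each neuron and time bin (X: trials x neurons x bins)."""
    return X - X.mean(axis=0, keepdims=True)


class MeanDiffClassifier:
    """Linear classifier whose hyperplane bisects the two class means (a stand-in)."""

    def fit(self, X, y):
        m0, m1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
        self.w = m1 - m0
        self.b = -self.w @ (m0 + m1) / 2
        return self

    def distance(self, X):
        """Signed distance of each trial to the hyperplane (the encoding-axis projection)."""
        return (X @ self.w + self.b) / np.linalg.norm(self.w)

    def predict(self, X):
        return (self.distance(X) > 0).astype(int)


def cross_temporal_accuracy(X_train, y_train, X_test, y_test):
    """Train on each time bin of one task; test on every time bin of another."""
    n_bins = X_train.shape[2]
    acc = np.empty((n_bins, n_bins))
    for i in range(n_bins):
        clf = MeanDiffClassifier().fit(X_train[:, :, i], y_train)
        for j in range(n_bins):
            acc[i, j] = np.mean(clf.predict(X_test[:, :, j]) == y_test)
    return acc
```

Row i of the resulting matrix shows how well the code learned at bin i carries over to every other bin. The diagonal reproduces standard time-resolved decoding.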

Classifiers trained on each time bin were then tested on all time bins of the test trials.

Projection onto the encoding axis of classifier

To visualize the high-dimensional representation of task variables, we used the MATLAB predict.m function to project the FR response on test trials onto the one-dimensional encoding space defined by the vector orthogonal to the classification hyperplane. In other words, the projection measures the distance of the neural response vector to the classifier hyperplane. For example, to measure the encoding of each colour category in the C1 task, we projected the trial-by-trial FR onto the axis orthogonal to the hyperplane of the colour classifier trained during the C1 task.

Quantifying the impact of task sequence on task discovery

The task-discovery period was defined as the first 110–120 trials after a switch in the task.

The monkey’s performance increased during this period, as they learned which task was in effect (Fig. 1f). As described in the main text, the animal’s behavioural performance depended on the sequence of tasks (Fig. 4a) and we therefore analysed neural representations separately for two different sequences of tasks:

(1) C1–C2–C1 and S1–C2–S1 block sequences (same task transition): the task on axis 1 (C1 or S1) repeated across blocks. As shown in the main text, monkeys tended to perform better during these task sequences, as if they were remembering the previous axis 1 task.

(2) S1–C2–C1 and C1–C2–S1 block sequences (different task transition): the task on axis 1 changed across blocks. As shown in the main text (Fig. 4a), monkeys tended to perform worse on these task sequences.
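The two sequence categories, together with the initial-performance split described in the next paragraph, can be assigned with a short helper. This sketch assumes blocks are listed in the order they were run within a session and treats an initial accuracy of exactly 50% as 'high', a tie case the text does not specify. Function names are hypothetical.

```python
AXIS1 = ("S1", "C1")


def label_transitions(blocks):
    """Label axis-1 blocks that follow an axis-1 -> C2 pair as 'same' or 'different'.

    blocks: task names in the order they were run within a session.
    Returns (block index, label) pairs.
    """
    labels = []
    for i in range(2, len(blocks)):
        before, middle, current = blocks[i - 2], blocks[i - 1], blocks[i]
        if current in AXIS1 and middle == "C2" and before in AXIS1:
            labels.append((i, "same" if before == current else "different"))
    return labels


def initial_performance(correct, n_first=25):
    """'low' if accuracy on the first 25 trials is below 50%, else 'high' (ties assumed high)."""
    first = correct[:n_first]
    return "low" if sum(first) / len(first) < 0.5 else "high"
```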

As we were interested in understanding how changes in neural representations corresponded to the animals’ behavioural performance, we divided the ‘different task transition’ sequences into two further categories: on ‘low initial performance’ blocks, the animal’s behavioural performance during the first 25 trials of the block was less than 50%, while on ‘high initial performance’ blocks, the performance was greater than 50%.

Owing to constraints on the number of neurons, different but overlapping populations of neurons were used to quantify neural representations in the C1–C2–C1 and S1–C2–C1 task sequences.

Task belief representation during task discovery

To measure the animal’s internal representation of the task (that is, their ‘belief’ about the task, as represented by the neural population), we trained a task classifier to decode whether the current task was C1 or S1.

The classifier was trained on neural data from the last 75 trials of each task block, when the animal’s performance was high (reflecting the fact that the animals were accurately estimating the task at the end of the block). The number of congruent and incongruent trials was balanced in the training dataset (32 trials: 4 trials for each of the four stimulus types, in each task). We included all C1 blocks in the training set, regardless of their task sequence. Only correct trials were used to train and test the classifier. To minimize the effect of the neural response to the visual stimulus, the classifier was trained on neural activity from the fixation period (that is, before stimulus onset).

As we were interested in measuring differences between tasks, we did not subtract the mean firing rate before training the classifier.

The task classifier was tested on trials from the beginning of blocks of the C1 task. Test trials were drawn from windows of 40 trials, slid every 5 trials, during learning (from trials 1–40 to trials 71–110). Overlapping test and train trials from the same task were removed. In contrast to the training set, testing was done separately for the S1–C2–C1 and C1–C2–C1 task sequences (Fig. 4d). As we were interested in focusing on the learning of the C1 task, we only used S1–C2–C1 task sequences with low initial performance (see above). Classifiers were tested on pseudopopulations built from trials within each trial window, with a minimum of four test trials (one trial for each of the four stimulus congruency types from task C1).

Neurons that did not include the required number of test trials for all trial windows were dropped. Note that, as we were using a small moving window of trials during task discovery, we had to trade off the number of included neurons against the number of training and test trials for this analysis. Moreover, although the number of correct trials increased as the animal discovered the task in effect, we kept a constant number of training and test trials in each of the sliding trial windows. To quantify the animal’s belief about the current task, we measured the distance to the hyperplane of the C1/S1 task classifier (task belief encoding). For Fig. 4d, we averaged the performance of the classifier in the pre-stimulus processing window (−400 ms to 0 ms).

Correlation between task belief encoding and behavioural performance

To calculate the correlation between the animal’s task belief during task discovery and their behavioural performance (Fig. 4e), we used Kendall’s τ statistic with a permutation test to correct for autocorrelation in the signal (detailed below). This measurement used the behavioural performance in each window of trials and the belief encoding, as estimated from the task classifier, on that same window of trials. As we are working with pseudopopulations of neurons, we estimated the behavioural performance for each window of trials during learning as the average of behavioural performance across all the trials in the window. The behavioural performance for each trial was taken as the average of the animal’s behavioural performance during the previous 10 trials.

This averaging yielded one average performance value for each of the 16 trial windows. The task belief was measured for the same trials, using the distance to the task classifier hyperplane averaged over the period from 400 ms to 0 ms before stimulus onset (note that, as described above, all of these trials were withheld from the training data). Task belief was then taken as the average distance across all trials in a window of trials.

Evolution of colour category representation during task discovery

We were interested in quantifying how the shared colour representation was engaged as the animal discovered the C1 task (Fig. 4f,g). To this end, we trained a classifier to categorize colour using the last 75 trials of the C2 task, when the animal’s behavioural performance was high, and then tested it during discovery of the C1 task.

The classifier was trained on only correct trials, and the training data were balanced for congruent and incongruent stimuli (16 trials: 4 trials for each of the four stimulus congruency types). This ensured an equal number of correct trials for each congruency type across all trial windows, controlling for motor response activity during the task discovery period. As cross-axis response decoding between the C1 and C2 tasks was weak (Extended Data Fig. 5d), cross-task classifiers capture the representation of the colour category that is shared between tasks.

Similar to the task classifier described above, the shared colour classifier was tested using trials from the C1 task in a sliding window of 40 trials stepped by 5 trials, in both the S1–C2–C1 and C1–C2–C1 sequences of tasks (Fig. 4f; as above, only low initial performance blocks were used for the S1–C2–C1 task sequences).

We used four trials to test the classifier (one trial for each of the four stimulus congruency types from task C1). For Fig. 4g, we averaged the performance of the classifier in the stimulus processing window (100 ms to 300 ms after stimulus onset).

Evolution of shape category representation during task discovery

We were interested in measuring the change in the representation of the stimulus’s shape category as the animal learned the C1 task (Fig. 4i). Our approach followed that used for the colour category representation described above, so we only note differences here. We trained a classifier to categorize stimulus shape based on neural responses during the S1 task (limited to the last 75 trials of each block). To ensure that the classifier was responding only to shape (and not motor response), we trained it on a balanced set of correct (rewarded) and incorrect (unrewarded) trials (16 trials: 4 trials for each reward condition and for each shape category).

The classifier was tested using trials from the C1 task (Fig. 4i). Note that we used the same C1 trials to quantify the representation of shared colour category, shape category and task belief for S1–C2–C1 task sequences. For Fig. 4j, we averaged the performance of the classifier in the window of time when stimuli were processed (100 ms to 300 ms after stimulus onset). The total numbers of LPFC neurons included for S1–C2–C1 and C1–C2–C1 task sequences to quantify the representation of shared colour category, shape category and task belief were 136 and 154, respectively.

Evolution of response direction representation during task discovery

To measure the change in response direction representation as the monkeys learned the C1 task, we trained a classifier to categorize the response direction based on the neural response during the S1 task (Fig. 4k; last 75 trials of the block).

As above, we used a balanced number of correct and incorrect trials to control for information about the stimulus (12 trials: 3 trials for each reward condition and each response location). The classifier was then tested on correct trials from the C1 task during task learning (Fig. 4k; as above: sliding windows of 40 trials, stepped by 5 trials, in S1–C2–C1 and C1–C2–C1; tested on 4 trials: 1 for each reward condition and each response location). For Fig. 4l, we averaged the performance of the classifier in the window of time when response location was processed (200 ms to 400 ms after stimulus onset). The total numbers of LPFC neurons included for S1–C2–C1 and C1–C2–C1 task sequences to quantify the representation of shared response direction were 120 and 155, respectively.

Classifier statistical test to detect trends during task discovery period

To quantify the statistical significance of trends in representations during task discovery (Figs. 4 and 5), we used trend-free prewhitening (TFPW)62,63, as implemented in MATLAB64. This method reduces serial correlation in time-series data, allowing robust statistical inference in the presence of trends. TFPW first detrends the time series by removing Sen’s slope. It then prewhitens the detrended series by modelling its autocorrelation with an autoregressive (AR) model (typically AR(1)), producing residuals free of temporal dependencies. Finally, it adds the original trend back to generate the processed time series. Both the Mann–Kendall statistic and Sen’s slope were used to estimate the significance of trends in the data.

To ensure that our reported statistics were unbiased, we used the Sen’s slope estimated on the TFPW-prewhitened data to estimate the P value of the observed data. To do so, we used permutation tests to estimate the likelihood that the observed trend slope occurred by chance.
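The TFPW steps listed above can be sketched in NumPy as follows. This is a minimal sketch, not the MATLAB implementation the authors used (ref. 64): it estimates the AR(1) coefficient as the lag-1 autocorrelation of the detrended series and omits refinements that some implementations add (for example, testing that coefficient for significance or bias-correcting it). The function names are hypothetical.

```python
import numpy as np


def sens_slope(y):
    """Sen's slope: median of the slopes between all pairs of points."""
    y = np.asarray(y, dtype=float)
    i, j = np.triu_indices(len(y), k=1)
    return float(np.median((y[j] - y[i]) / (j - i)))


def lag1_autocorr(x):
    """Lag-1 autocorrelation, used here as the AR(1) coefficient estimate."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    denom = np.sum(x * x)
    return 0.0 if denom == 0 else float(np.sum(x[1:] * x[:-1]) / denom)


def tfpw(y):
    """Trend-free prewhitening: detrend, remove AR(1) dependence, restore trend."""
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y))
    b = sens_slope(y)                      # 1. trend estimate
    detrended = y - b * t                  # 2. remove the trend
    r1 = lag1_autocorr(detrended)          # 3. AR(1) coefficient
    whitened = detrended[1:] - r1 * detrended[:-1]   # 4. prewhiten (one sample shorter)
    return whitened + b * t[1:]            # 5. add the trend back


def mann_kendall_s(y):
    """Mann-Kendall S: concordant minus discordant pairs over time."""
    y = np.asarray(y, dtype=float)
    i, j = np.triu_indices(len(y), k=1)
    return int(np.sum(np.sign(y[j] - y[i])))
```

Under these assumptions, the trend slope for a vector `perf` of per-window classifier performances would be `sens_slope(tfpw(perf))`, and the same call on each permuted performance vector would give the null slopes.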

To create a null distribution of classifier performance, we randomly permuted the labels of test data 250 times across all trial windows (trials 1–110 for Fig. 4 and 1–120 for Fig. 5). To stabilize the estimate of classifier performance, we used the same set of trained classifiers for testing (for example, the same set of classifiers trained to decode colour category in the C2 task was used to test colour category decoding in the C1 task during task discovery). Furthermore, the performance of the classifier on the observed data and on each instance of the permuted data was averaged over 10 folds and 50 novel iterations of each classifier. The slope was calculated for each timepoint across all trial windows to estimate the trend for observed and permuted classifier performances. The null distribution was the combination of the permuted and observed slope values (total n = 251). The likelihood of the observed slope was then estimated as its percentile within the null distribution.
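The percentile step above can be sketched as follows. The function name is hypothetical, and treating the upper tail as the P value for an increasing trend is our assumption. Including the observed slope in the null (250 permuted + 1 observed = 251 values) means the smallest attainable P value is 1/251.

```python
import numpy as np


def permutation_percentile(observed, permuted):
    """Locate an observed statistic within a null distribution made of the
    permuted statistics plus the observed one (e.g. 250 + 1 = 251 values).

    Returns the percentile of the observed value and a one-sided P value for
    an increasing trend (fraction of null values >= observed).
    """
    null = np.append(np.asarray(permuted, dtype=float), observed)
    percentile = 100.0 * np.mean(null <= observed)
    p_upper = np.mean(null >= observed)
    return float(percentile), float(p_upper)
```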

As described above, we used cluster correction to control for multiple comparisons across time.

To measure rank correlation between two random variables (for example, the correlation between task belief and behavioural performance, Fig. 4e), we used Kendall’s τ. As TFPW requires a monotonic time variable, it could not be applied here; instead, we computed Kendall’s τ for both the observed and permuted datasets and reported the z-score of the observed value against the permuted values to account for inflation due to autocorrelation.

Transfer of information between subspaces

As described in the main text, we were interested in testing the hypothesis that the representation of the stimulus colour in the shared colour subspace predicted the response in the shared response subspace on a trial-by-trial basis (Fig. 3c–e and Extended Data Fig. 7a–c).

To this end, we correlated trial-by-trial variability in the strength of encoding of colour and response along four different classifiers (using Pearson’s correlation as implemented in MATLAB’s corr.m function).

First, as described above, a shared colour classifier was trained to decode colour category from the C2 task and tested on trials from the C1 task. Training data were balanced for correct and incorrect trials (rewarded and unrewarded trials; 16 trials: 4 trials for each reward condition and each colour category). Test trials were all correct trials (2 trials: 1 trial for each colour category). Both train and test trials were drawn from the last 50 trials of the block (to ensure that the animals were performing the task well).

The total number of LPFC neurons included for this classifier was 63 (only from monkey Si, owing to constraints on the number of trials).

Similarly, a second, shared response classifier was trained on trials from the S1 task and then tested on the same set of test trials as the shared colour classifier.

A third classifier was trained to decode the response direction on axis 2, using a balance of correct and incorrect trials from the C2 task. This classifier was tested on the same set of test trials as the shared colour classifier.

Finally, a fourth classifier was trained to decode the shared colour representation, but was now trained on correct trials from the C1 task (12 trials: 3 trials for each of the four stimulus congruency types) and tested on correct trials from the C2 task (2 trials: 1 trial for each colour category).

The total number of LPFC neurons included for this classifier was 101 (only from monkey Si, owing to constraints on the number of trials).

To account for the arbitrary nature of positive and negative labels, we calculated the magnitude of encoding by flipping the sign of the encoding for trials with negative labels. All four classifiers were trained on 2,000 iterations of training set trials. Note that the first three classifiers were tested on the same test trials, allowing trial-by-trial correlations to be measured. Furthermore, all four classifiers were trained at each timepoint, enabling us to measure the cross-temporal correlation between any pair of classifiers.

Together, these four classifiers allowed us to test three hypothesized correlations that reflect the transfer of task-relevant stimulus information into representations of behavioural responses.
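The distance-to-hyperplane measure, the sign flip for negative labels and the trial-by-trial (and cross-temporal) Pearson correlation described in the preceding paragraph can be sketched as below. This is a sketch under assumptions: linear classifiers with weights `w` and bias `b`, labels coded as ±1, and hypothetical function names; the authors computed the correlations with MATLAB's corr.m.

```python
import numpy as np


def signed_distance(X, w, b):
    """Signed distance of each row of X (trials x neurons) to the hyperplane w.x + b = 0."""
    w = np.asarray(w, dtype=float)
    return (np.asarray(X, dtype=float) @ w + b) / np.linalg.norm(w)


def label_aligned_encoding(distance, labels):
    """Flip the sign for trials whose label is -1, so larger values always
    mean stronger encoding of the trial's true category."""
    return np.asarray(distance, dtype=float) * np.asarray(labels, dtype=float)


def pearson_r(a, b):
    """Trial-by-trial Pearson correlation between two encoding vectors."""
    return float(np.corrcoef(a, b)[0, 1])


def cross_temporal_corr(enc_a, enc_b):
    """enc_a, enc_b: (n_trials, n_timepoints) encodings from two classifiers on
    the same test trials. Returns r[t_a, t_b], the Pearson correlation across
    trials between classifier A at time t_a and classifier B at time t_b."""
    enc_a = np.asarray(enc_a, dtype=float)
    enc_b = np.asarray(enc_b, dtype=float)
    za = (enc_a - enc_a.mean(axis=0)) / enc_a.std(axis=0)
    zb = (enc_b - enc_b.mean(axis=0)) / enc_b.std(axis=0)
    return za.T @ zb / enc_a.shape[0]
```

The cross-temporal variant only makes sense when both classifiers are evaluated on the same test trials, which is why the text notes that the first three classifiers share their test set.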

First, one might expect that, on any given trial before the start of the saccade, the strength and direction of the shared colour representation, as estimated by the distance to the hyperplane of the first classifier, should be correlated with the shared response, as estimated by the distance to the hyperplane of the second classifier. This correlation is seen in Fig. 3c.

Second, during performance of the C1 task, one would expect that, before the start of the saccade, the shared colour representation should not be correlated with the response on axis 2 predicted by the C2 task. This is quantified by correlating the distance to the hyperplane of the first classifier with the distance to the hyperplane of the third classifier, as seen in Fig. 3d.

Third, by contrast, one would expect these representations to be correlated when the animal is performing the C2 task.

This is quantified by correlating the distance to the hyperplane of the fourth classifier with the distance to the hyperplane of the third classifier, as seen in Extended Data Fig. 7a.

Distance along colour and shape encoding axes during the discovery of the task

To understand how the geometry of the neural representation of the colour and shape of the stimulus evolved during task discovery (Fig. 5), we measured the distance in neural space between the two prototype colours (red and green) and the two prototype shapes (tee and bunny). Distance was measured along the encoding axis for each stimulus dimension (that is, the axis orthogonal to the hyperplane of the colour or shape classifier described above).

So, for each test trial, the distance along the colour encoding axis was:

$$\mathrm{colour\ encoding\ distance}=\mathrm{Avg}\left(\mathrm{abs}\left(\mathrm{encoding}(\mathrm{red},\mathrm{bunny})-\mathrm{encoding}(\mathrm{green},\mathrm{bunny})\right),\ \mathrm{abs}\left(\mathrm{encoding}(\mathrm{red},\mathrm{tee})-\mathrm{encoding}(\mathrm{green},\mathrm{tee})\right)\right)$$

This approach enabled us to calculate the distance between red and green colours while controlling for differences across shapes. The colour encoding distance was estimated for 250 iterations of the classifiers, enabling us to estimate the mean and standard error of the distance. A similar process was followed for estimating the shape encoding distance.

To calculate the colour and shape encoding distances during task discovery (Fig. 5a,b), we calculated the distance above in a sliding window of 45 trials, stepped by 5 trials, after the switch into the C1 task during the S1–C2–C1 sequences of tasks with low initial performance.

CPI

CPI was defined as the log of the ratio of the average colour encoding distance to the average shape encoding distance described above:

$$\mathrm{CPI}=\log\left(\frac{\mathrm{avg}(\mathrm{colour\ encoding\ distance})}{\mathrm{avg}(\mathrm{shape\ encoding\ distance})}\right)$$

To calculate the CPI for each task (Fig. 5c), we computed the shape and colour encoding distances using all trials of a given task. To measure the shape distance for all three tasks, we trained a classifier to categorize stimulus shape based on neural responses during the S1 task (limited to the last 75 trials of each block).
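The colour and shape encoding distances and the CPI defined above can be computed as in the sketch below. The dictionary layout (keyed by colour and shape prototype) and the function names are illustrative assumptions, and the natural logarithm is assumed because the text does not state the base. The shape distance follows the same pattern as the colour distance, comparing bunny with tee within each colour.

```python
import numpy as np


def colour_encoding_distance(enc):
    """enc maps (colour, shape) -> encoding along the colour axis.
    Red-green separation, computed within each shape and then averaged."""
    return float(np.mean([abs(enc[("red", "bunny")] - enc[("green", "bunny")]),
                          abs(enc[("red", "tee")] - enc[("green", "tee")])]))


def shape_encoding_distance(enc):
    """enc maps (colour, shape) -> encoding along the shape axis.
    Bunny-tee separation, computed within each colour and then averaged."""
    return float(np.mean([abs(enc[("red", "bunny")] - enc[("red", "tee")]),
                          abs(enc[("green", "bunny")] - enc[("green", "tee")])]))


def cpi(colour_distances, shape_distances):
    """Log ratio of mean colour to mean shape encoding distance (natural log assumed).
    Positive values indicate relatively stronger colour than shape encoding."""
    return float(np.log(np.mean(colour_distances) / np.mean(shape_distances)))


colour_enc = {("red", "bunny"): 1.0, ("green", "bunny"): -1.0,
              ("red", "tee"): 0.5, ("green", "tee"): -0.5}
shape_enc = {("red", "bunny"): 0.75, ("green", "bunny"): 0.75,
             ("red", "tee"): -0.75, ("green", "tee"): -0.75}
```

With these toy encodings both distances equal 1.5, so `cpi([1.5], [1.5])` is 0; within-shape (or within-colour) differencing is what lets the measure ignore the other feature.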

To ensure that the shape classifier was responding only to shape (and not motor response), we trained it on a balanced set of correct (rewarded) and incorrect (unrewarded) trials (20 trials: 5 trials for each reward condition and for each shape category). Shape encoding for each task was calculated using four trials to test the classifier (one trial for each of the four stimulus congruency types).

To measure colour distance in the S1–C2–C1 task sequence, we trained a colour category classifier on correct trials of the C1–C1–C2 task, balancing for congruent and incongruent stimuli (20 trials: 5 trials for each of the four stimulus congruency types).

Colour encoding for each task was calculated using four trials of that task to test the classifier (one trial for each of the four stimulus congruency types).

To track changes in the relative strength of colour and shape information during task discovery, we calculated CPI in a sliding window of 45 trials (slid every 5 trials, from trials 1–45 to trials 76–120) after the monkeys switched into the C1 task (during the S1–C2–C1 sequences of tasks with low initial performance). Note that, as we controlled for motor response when calculating shape and colour distance, the CPI is not affected by motor response information65.

Correlation between task belief encoding and CPI

To correlate the CPI with task belief encoding, we used the same set of C1 test trials both to calculate CPI and to estimate the task identity using the task classifier described above.

CPI values in the 100 ms–300 ms window after stimulus onset and belief encoding values in the 400 ms to 0 ms window before stimulus onset were averaged over all trials in each trial window, and the Mann–Kendall correlation between the two resulting vectors was calculated (Fig. 5e).

Quantifying suppression of axis representation

We trained a response axis classifier to decode whether the current task axis was axis 1 or axis 2. The classifier was trained on neural data from all trials of the C1 and C2 tasks, with an equal number of trials for each response direction on the task-specific axis (for example, equal numbers of UL and LR responses on axis 1 for the C1 task; 36 trials: 9 trials for each response direction, in each task). All correct and incorrect trials were used to train and test the classifier. To capture the response period, the classifiers were trained on the number of spikes between 200 ms and 450 ms after stimulus onset.

As we were interested in measuring the difference in neural activity between response axes, we did not subtract the mean FR before training the classifier. For Extended Data Fig. 10d,f–i, the classifier was trained to decode response axis using S1 and C2 trials.

To create a null distribution for classifier weights, we randomly permuted the response axis labels for a given response direction (1,000 iterations).

Selectivity for axis of response

We used the classifier β weights to group neurons according to their axis selectivity. Neurons with significantly negative β weights were categorized as selectively responding to axis 1. Neurons with significantly positive β weights were categorized as selectively responding to axis 2. Neurons without significant β weights were categorized as non-selective (Extended Data Fig. 10a).
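The grouping above can be sketched as a per-neuron two-tailed permutation test on the classifier weights. This is a sketch under assumptions that are ours, not the source's: the significance threshold α = 0.05, a null distribution centred on zero, and a two-tailed P value computed as the fraction of permuted |β| at least as large as the observed |β|. The function name is hypothetical.

```python
import numpy as np


def axis_selectivity(beta_obs, beta_null, alpha=0.05):
    """Classify neurons by the sign and significance of their classifier weight.

    beta_obs: (n_neurons,) observed weights.
    beta_null: (n_permutations, n_neurons) weights from label-permuted fits.
    Two-tailed P: fraction of null |beta| >= observed |beta| (null assumed centred on 0).
    """
    beta_obs = np.asarray(beta_obs, dtype=float)
    beta_null = np.asarray(beta_null, dtype=float)
    p = np.mean(np.abs(beta_null) >= np.abs(beta_obs), axis=0)
    labels = np.full(beta_obs.shape, "non-selective", dtype=object)
    sig = p < alpha
    labels[sig & (beta_obs < 0)] = "axis 1"   # significantly negative weight
    labels[sig & (beta_obs > 0)] = "axis 2"   # significantly positive weight
    return labels, p


# Toy usage: 1,000 permuted weights per neuron drawn from a standard normal.
null = np.random.default_rng(0).normal(0.0, 1.0, size=(1000, 3))
labels, p = axis_selectivity([-10.0, 10.0, 0.1], null)
```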

To determine the significance of a neuron’s classifier weight, we compared the observed β weight to a null distribution (two-tailed permutation test).

To quantify the suppression of neural activity for each category of neurons, we averaged the FRs of neurons in each category during trials of the C1 task when the animal responded on axis 1 or axis 2 (Fig. 5g; Extended Data Fig. 10e shows the same analysis for the S1 task). This meant including all trials when the animal responded on axis 1 (both correct and error) and all trials when the animal responded on axis 2 (all errors). As the monkeys rarely responded on the incorrect axis (Extended Data Fig. 2g), the number of trials was limited, and so neurons without any error trials on axis 2 were excluded.

Note that, although neurons were sorted by their activity during the animal’s response (200–450 ms after stimulus onset), we observed suppression across the entire trial (Fig. 5g). Furthermore, similar results were seen when a neuron’s axis selectivity was quantified on withheld trials (for example, in Extended Data Fig. 10d–i, axis selectivity was defined on the S1 and C2 tasks and applied to C1 trials).

Decoding response axis during the discovery of the task

We trained a response axis classifier to decode whether the current task axis was axis 1 or axis 2.

The classifier was trained on neural data from the last 75 trials of the C1 and C2 tasks, when the monkeys’ performance was high (reflecting the fact that the animals were accurately responding on the correct axis at the end of the block). The classifier was trained on an equal number of trials for each direction on the response axis (for example, equal numbers of UL and LR responses on axis 1 for the C1 task; 36 trials: 9 trials for each response direction, in each task). Correct and incorrect trials were used to train and test the classifier. To remove the effect of stimulus processing, the classifier was trained on neural activity from the fixation period (that is, −400 to 0 ms relative to stimulus onset).

As we were interested in measuring differences between response axes, we did not subtract the mean FR before training the classifier. The classifier was tested on trials from the beginning of blocks of the C1 task. Test trials were drawn from windows of 10 trials, slid every 3 trials, during task discovery (from trials 1–10 to trials 66–75). Overlapping test and train trials from the same task were removed. Classifiers were tested on pseudopopulations built from trials within each trial window (two trials: one trial for each response direction on axis 1 from task C1).

Quantifying the angle between classifier hyperplanes

To quantify the similarity between the decision boundaries of classifiers trained on different tasks, we calculated the angle between the hyperplanes defined by their weight vectors. Each classifier produced a weight vector $\mathbf{w}_i$ defining the hyperplane that separates the data points according to the respective task.

We averaged the resulting weight vectors across resampling repetitions of trials (250 iterations). The angle $\theta$ between two average hyperplanes, defined by weight vectors $\mathbf{w}_i$ and $\mathbf{w}_j$, was calculated from their cosine similarity:

$$\cos(\theta)=\frac{\mathbf{w}_i\cdot\mathbf{w}_j}{\lVert\mathbf{w}_i\rVert\,\lVert\mathbf{w}_j\rVert}$$

The angle θ was then obtained by taking the inverse cosine of the cosine similarity. Angles close to 0° indicate that the classifiers’ hyperplanes are nearly parallel, implying similar decision boundaries across tasks. Conversely, angles close to 90° indicate orthogonal hyperplanes and therefore distinct decision boundaries.

To measure the angle within the C1 task, we calculated the angle between classifiers trained to classify colour category on the last 50 trials of the C1 task in the C1–C2–C1 task sequence and in the S1–C2–C1 task sequence.

This ensured that there were no overlapping trials between training samples. Only correct trials were used in this analysis, and training and test trials were balanced for congruent and incongruent stimuli (20 trials: 5 trials for each of the four stimulus congruency types).

To measure the angle between the colour category classifiers of the C1 and C2 tasks, we trained the classifier on the last 50 trials of task C1 and of task C2.

TDR analysis

The TDR analysis requires multiple steps21. Here, we describe each step in turn.

Linear regression

We used a GLM to relate the activity of each neuron (y) to the task variables at each moment in time.

The full model was:

$$\begin{aligned}y={}&\beta_0+\beta_1\times\mathrm{stimulus\ colour\ morph\ level}+\beta_2\times\mathrm{stimulus\ shape\ morph\ level}+\beta_3\times\mathrm{time}\\&+\beta_4\times\mathrm{task\ S1}+\beta_5\times\mathrm{task\ C1}+\beta_6\times\mathrm{task\ C2}\\&+\beta_7\times\mathrm{motor\ response\ direction\ on\ axis\ 1}+\beta_8\times\mathrm{motor\ response\ direction\ on\ axis\ 2}+\beta_9\times\mathrm{reward}\end{aligned}$$

where y is the FR of each neuron, z-scored by subtracting the mean response from the FR at each timepoint and dividing by the s.d. The mean and s.d. were computed across all trials and timepoints for each neuron. β0, β1, …, β9 are the regression coefficients corresponding to each predictor.

Predictors were: stimulus colour morph level, coded as −1 for the 0% morph level, −0.5 for the 30% and 170% morph levels, +0.5 for the 70% and 130% morph levels and +1 for the 100% morph level; stimulus shape morph level, coded as −1 for the 0% morph level, −0.5 for the 30% and 170% morph levels, +0.5 for the 70% and 130% morph levels and +1 for the 100% morph level; time, indicating the temporal progression within a recording session, normalized between 0 and 1 (continuous variable); task identity, indicating the identity of the task (categorical variable: S1, C1, C2); motor response direction on axis 1, indicating the direction of the motor response (categorical variable; −1 for UL, +1 for LR); motor response direction on axis 2, indicating the direction of the motor response (categorical variable; −1 for LL, +1 for UR); and reward, indicating whether a reward was received following the response (binary variable; 1 for reward, 0 for no reward).

The GLM coefficients (β) were estimated using maximum-likelihood estimation as implemented by MATLAB’s lassoglm.m function. Independent models were fit for each timepoint.
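The per-timepoint fit described above, with the L1 penalty (λ = 0.0015) reported in the text, can be sketched with a small coordinate-descent lasso in NumPy. This is an illustrative substitute for MATLAB's lassoglm.m, not a reimplementation of it: the sketch minimizes one common lasso objective, (1/(2N))‖y − β0 − Xβ‖² + λ‖β‖₁, whereas lassoglm may scale the deviance term differently and by default standardizes predictors, so λ values are not guaranteed to be interchangeable. Categorical predictors such as task identity are assumed to be entered as indicator columns. All names are hypothetical.

```python
import numpy as np

# Morph-level coding listed in the text (levels not listed, e.g. 50%, are not handled here).
MORPH_CODE = {0: -1.0, 30: -0.5, 170: -0.5, 70: 0.5, 130: 0.5, 100: 1.0}


def zscore_neuron(fr):
    """fr: (n_trials, n_timepoints); one mean and s.d. over all trials and timepoints."""
    fr = np.asarray(fr, dtype=float)
    return (fr - fr.mean()) / fr.std()


def soft_threshold(z, lam):
    return np.sign(z) * max(abs(z) - lam, 0.0)


def lasso_cd(X, y, lam, n_iter=500, tol=1e-10):
    """Minimise (1/(2N))||y - b0 - X b||^2 + lam * ||b||_1 by coordinate descent."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean       # centring handles the unpenalised intercept
    col_sq = (Xc ** 2).sum(axis=0) / n
    beta = np.zeros(p)
    resid = yc.copy()
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0:
                continue
            # Partial-residual correlation for coordinate j, then soft-threshold.
            rho = Xc[:, j] @ resid / n + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam) / col_sq[j]
            delta = new - beta[j]
            if delta != 0.0:
                resid -= Xc[:, j] * delta
                beta[j] = new
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return y_mean - x_mean @ beta, beta


def fit_per_timepoint(X, Y, lam=0.0015):
    """Independent fit at each timepoint. X: (n_trials, n_predictors) design matrix;
    Y: (n_trials, n_timepoints) z-scored rates. Returns (n_timepoints, n_predictors)."""
    return np.array([lasso_cd(X, Y[:, t], lam)[1] for t in range(Y.shape[1])])
```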

To address potential overfitting, we applied Lasso (L1) regularization to the GLM weights with a regularization coefficient (λ) of 0.0015. Correct and incorrect trials were used in fitting the model.

Population average responses

We estimated the average response of the neural population to each task variable. To calculate the average neural response to each colour and shape morph level, we separately averaged trials within each morph level (0%, 30%, 70%, 100%, 130% and 170%) for each task. To calculate the average neural response for each response direction, we separately averaged trials within each response direction (UL, UR, LL or LR) for each task. For all task variables, we smoothed the resulting response in time with a 150 ms boxcar.

Finally, we z-scored the smoothed average for a given unit by subtracting the mean across times and conditions and dividing the result by the corresponding s.d.

PCA

We used principal component analysis (PCA; as implemented in MATLAB’s pca.m function) to find the dimensions in the state space that captured most of the variance of the population average response and to mitigate the effect of noise. We concatenated the average responses across conditions to build a matrix X of size ($N_{\mathrm{condition}}$ × T) × $N_{\mathrm{unit}}$, where $N_{\mathrm{condition}}$ is the total number of conditions and T is the number of time samples. We used the first $N_{\mathrm{PCA}}$ principal components, which together captured 90% of the explained variance, to define a de-noising matrix D of size $N_{\mathrm{unit}}$ × $N_{\mathrm{unit}}$.

Regression subspace

We used the regression coefficients described above to identify dimensions in the state space containing task-related variance.

We used four task variables to define this space: colour morph level, shape morph level, response direction on axis 1 and response direction on axis 2. For each of these task variables, we built coefficient vectors $\beta_{v,t}(i)$ corresponding to the regression coefficient for task variable v, time t and unit i. We then denoised each coefficient vector by projecting it onto the subspace spanned by the $N_{\mathrm{PCA}}$ principal components of the population average defined above:

$$\beta_{v,t}^{\mathrm{PCA}}=D\,\beta_{v,t}$$

For each task variable, we computed the time $t_v^{\max}$ at which the norm of $\beta_{v,t}^{\mathrm{PCA}}$ was maximal. This defined the time-independent, denoised regression vectors

$$\beta_v^{\max}=\beta_{v,t_v^{\max}}^{\mathrm{PCA}}$$

with $t_v^{\max}=\mathrm{argmax}_t\lVert\beta_{v,t}^{\mathrm{PCA}}\rVert$.
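The denoising, peak-time selection, QR orthogonalization (described in the following paragraph) and projection steps can be sketched in NumPy as below. This is a sketch under assumptions: the array layouts are illustrative, PCA is done with NumPy's SVD on mean-centred data in place of MATLAB's pca.m, and D is built as $V_kV_k^{T}$ from the first k principal axes (a standard way to form a projection onto the top components; the text only specifies D's size). Function names are hypothetical.

```python
import numpy as np


def denoising_matrix(X, var_explained=0.90):
    """X: (n_conditions * T, n_units) concatenated population averages.
    Returns D = V_k V_k^T from the first k PCs reaching var_explained."""
    Xc = X - X.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    frac = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = int(np.searchsorted(frac, var_explained) + 1)   # first k with frac >= target
    V = vt[:k].T                                        # (n_units, k)
    return V @ V.T


def peak_regression_vectors(betas, D):
    """betas: (n_variables, T, n_units) regression coefficients.
    Denoise each vector with D, then keep each variable's vector at the time
    its norm peaks. Returns B_max (n_units, n_variables) and the peak times."""
    denoised = betas @ D                      # D is symmetric, so b @ D == D @ b
    norms = np.linalg.norm(denoised, axis=2)  # (n_variables, T)
    t_max = norms.argmax(axis=1)
    B_max = np.stack([denoised[v, t_max[v]] for v in range(betas.shape[0])], axis=1)
    return B_max, t_max


def orthogonal_axes(B_max):
    """QR decomposition; the columns of Q are the orthogonalized task axes."""
    Q, _ = np.linalg.qr(B_max)
    return Q


def project(Q, X_c):
    """Project one condition's average response X_c (n_units, T) onto each axis."""
    return Q.T @ X_c                          # (n_variables, T)
```

Because QR orthogonalizes the columns in order, the first axis keeps the direction of the first regression vector and later axes keep only the components orthogonal to earlier ones, so the column order of `B_max` matters.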

To compute orthogonal axes for colour, shape, response direction on axis 1 and response direction on axis 2, we orthogonalized the regression vectors $\boldsymbol{\beta}_v^{\max}$ using QR decomposition:

$$B^{\max}=\left[\boldsymbol{\beta}_1^{\max}\ \boldsymbol{\beta}_2^{\max}\ \boldsymbol{\beta}_3^{\max}\ \boldsymbol{\beta}_4^{\max}\right]=QR$$

where the first four columns of Q are the orthogonalized regression vectors $\boldsymbol{\beta}_v^{\perp}$ of the four task variables that comprise the regression subspace. To estimate the representation of task-related variables in the neural population, we then projected the average population response (described above) for each colour morph level, shape morph level and response direction onto these orthogonal axes:

$$p_{v,c}=\left(\boldsymbol{\beta}_v^{\perp}\right)^{T}X_c$$

where $p_{v,c}$ is the time series of length T for each morph level in a specific task. Note that, because TDR orthogonalizes task features, it can control for motor response when estimating the neural response to colour and shape.

CPI using TDR

To measure the distance between the two prototype colours (red and green) and the two prototype shapes (tee and bunny), we first projected the average PSTH for each prototype object onto the orthogonal encoding axes of colour and shape:

$$p_{\mathrm{colour},\mathrm{prototype}}=\left(\boldsymbol{\beta}_{\mathrm{colour}}^{\perp}\right)^{T}X_{\mathrm{prototype}}$$

$$p_{\mathrm{shape},\mathrm{prototype}}=\left(\boldsymbol{\beta}_{\mathrm{shape}}^{\perp}\right)^{T}X_{\mathrm{prototype}}$$

Distance was measured along the orthogonal encoding axes of shape and colour using the projected responses:

$$\mathrm{colour\ encoding\ distance}=\mathrm{Avg}\left(\mathrm{abs}\left(p_{\mathrm{colour},\text{red bunny}}-p_{\mathrm{colour},\text{green bunny}}\right),\ \mathrm{abs}\left(p_{\mathrm{colour},\text{red tee}}-p_{\mathrm{colour},\text{green tee}}\right)\right)$$

$$\mathrm{shape\ encoding\ distance}=\mathrm{Avg}\left(\mathrm{abs}\left(p_{\mathrm{shape},\text{red bunny}}-p_{\mathrm{shape},\text{red tee}}\right),\ \mathrm{abs}\left(p_{\mathrm{shape},\text{green bunny}}-p_{\mathrm{shape},\text{green tee}}\right)\right)$$

CPI was defined as the log of the ratio of the average colour encoding distance to the average shape encoding distance, as described above:

$$\mathrm{CPI}=\log\left(\frac{\mathrm{avg}(\text{colour encoding distance})}{\mathrm{avg}(\text{shape encoding distance})}\right)$$

Extended Data Fig. 9f reports the average CPI from 100 ms to 300 ms after stimulus onset.

Quantifying the angle between colour category and response direction subspaces across tasks using TDR

To quantify the angle between colour category subspaces, we used the orthogonal task variable vectors defined by TDR.

Similar to fitting a regression model for TDR, we first fit a regression model that included separate colour, shape and response axis weights for each task:

$$\begin{aligned}y={}&\beta_0+\beta_1\times\mathrm{C1\ task\ stimulus\ colour\ morph\ level}+\beta_2\times\mathrm{C2\ task\ stimulus\ colour\ morph\ level}+\beta_3\times\mathrm{S1\ task\ stimulus\ colour\ morph\ level}\\&+\beta_4\times\mathrm{C1\ task\ stimulus\ shape\ morph\ level}+\beta_5\times\mathrm{C2\ task\ stimulus\ shape\ morph\ level}+\beta_6\times\mathrm{S1\ task\ stimulus\ shape\ morph\ level}\\&+\beta_8\times\mathrm{S1\ task\ motor\ response\ direction\ on\ axis\ 1}+\beta_9\times\mathrm{C1\ task\ motor\ response\ direction\ on\ axis\ 1}\\&+\beta_{10}\times\mathrm{C2\ task\ motor\ response\ direction\ on\ axis\ 2}+\beta_{11}\times\mathrm{time}+\beta_{12}\times\mathrm{task\ S1}+\beta_{13}\times\mathrm{task\ C1}\\&+\beta_{14}\times\mathrm{task\ C2}+\beta_{15}\times\mathrm{reward}\end{aligned}$$

Models were fit on 200 resamples, each randomly drawing 75% of all trials for each individual task.

For ‘from switch’ conditions, the first 50 trials after the switch to each task were used and, for ‘to switch’ conditions, the last 50 trials in the blocks were used.

Similar to the TDR method described above, we used QR decomposition to find the orthogonal axes encoding each task variable, but now specific to each task (colour morph level, shape morph level, task identity, reward and task-specific axis of response):

$$B^{\max,\mathrm{C1\,task}}=Q_{\mathrm{C1}}R_{\mathrm{C1}},\quad B^{\max,\mathrm{C2\,task}}=Q_{\mathrm{C2}}R_{\mathrm{C2}},\quad B^{\max,\mathrm{S1\,task}}=Q_{\mathrm{S1}}R_{\mathrm{S1}}$$

For each resampling, the angle between the orthogonal colour axes of pairs of tasks (C1–C2, C1–S1 and C2–S1) was measured as

$$\cos(\theta)=\frac{\boldsymbol{\beta}_{\mathrm{colour},\mathrm{task}\,i}^{\perp}\cdot\boldsymbol{\beta}_{\mathrm{colour},\mathrm{task}\,j}^{\perp}}{\lVert\boldsymbol{\beta}_{\mathrm{colour},\mathrm{task}\,i}^{\perp}\rVert\,\lVert\boldsymbol{\beta}_{\mathrm{colour},\mathrm{task}\,j}^{\perp}\rVert}$$

where i and j denote the identities of the two tasks.
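The angle computations in this section (between averaged classifier weight vectors, and between TDR colour axes of two tasks) share the same cosine-similarity formula. The sketch below implements it, with an optional fold into 0–90°, which is how we read the wrapping described in the next paragraph: flipping the sign of either vector maps θ to 180° − θ, so taking the smaller of the two removes that ambiguity. Function names are hypothetical.

```python
import numpy as np


def angle_deg(w_i, w_j, wrap=False):
    """Angle in degrees between two vectors from their cosine similarity.
    With wrap=True the angle is folded into [0, 90], so a sign flip of either
    vector (e.g. from QR decomposition) does not change the result."""
    w_i = np.asarray(w_i, dtype=float)
    w_j = np.asarray(w_j, dtype=float)
    cos = w_i @ w_j / (np.linalg.norm(w_i) * np.linalg.norm(w_j))
    theta = np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))  # clip guards rounding
    return float(min(theta, 180.0 - theta)) if wrap else float(theta)


def angle_between_mean_hyperplanes(W_i, W_j, wrap=False):
    """W_i, W_j: (n_resamples, n_units) weight vectors; average each set first."""
    return angle_deg(np.mean(W_i, axis=0), np.mean(W_j, axis=0), wrap=wrap)
```

For example, `angle_deg([1, 0], [-1, 0])` is 180°, but with `wrap=True` it is 0°, reflecting that the two vectors span the same axis.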

Within-task angles (Extended Data Fig. 9c,d) were computed by randomly taking 200 pairs of resampled regression coefficient repetitions and finding the orthogonal axes as explained above. All angles were wrapped to the range 0–90°, because orthogonalization with QR decomposition can return vectors that are mathematically equivalent to the input vectors but with a flipped sign, owing to numerical choices or conventions.

We used a permutation test to assess whether the angle between pairs of tasks was significantly larger than expected by chance. The observed angle was estimated by fitting the regression model explained above to all trials. To compute a null distribution of angles, we generated 1,000 permuted datasets by randomly permuting the predictor values relative to the neural activity and refitting the model. For each permuted dataset, we computed the angle between task pairs as described above.

The likelihood of the observed angle was then estimated by computing the proportion of permuted datasets that yielded an angle smaller than or equal to the observed angle.

Quantifying whether task representations transfer across blocks

To test whether the task representation was maintained across blocks (Fig. 4b), we trained a classifier to discriminate between C1 and S1 trials using the last 50 trials of the block. We then tested this classifier in three ways. First, to quantify the information about the identity of the C1 versus S1 task, we tested this classifier on withheld trials from the last 50 trials of the C1 and S1 tasks (Fig. 4b (1)). Second, to quantify how much the representation of the C1 and S1 tasks transferred to the C2 task, we tested whether the classifier could discriminate between C1 and S1 tasks during the first 50 trials of the C2 task (that is, comparing C1–C2 versus S1–C2).

Third, to quantify how much the representation of the C1 and S1 tasks transferred to C1 or S1 in the next block, we tested the classifier on the first 50 trials after the switch into the C1 and S1 tasks.

For all training and testing, the total number of spikes in the period −400 to 0 ms relative to stimulus onset on each trial was used to build a pseudopopulation. Only correct trials were included in this analysis, and the training and test trials were balanced for congruent and incongruent stimuli (36 trials: 9 trials for each of the four stimulus congruency types). To compute a null distribution, we generated 1,000 permuted datasets by randomly permuting all task identity values relative to the neural activity and refitting the classifier.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Original source

First published in: www.nature.com

Publication date: 2025-11-26 02:00:00

Author: Sina Tafazoli
